Defending Democracy: Combating Disinformation and AI-Driven Manipulation Through Education

In today’s digital era, democracy itself is under threat from the rapid rise of disinformation, much of it fueled by increasingly sophisticated artificial intelligence. Disinformation spreads confusion, erodes trust, fosters polarization, and undermines the informed public debate that democratic societies need in order to function. In response to this urgent challenge, WP6, organized by Januam gUG in Darmstadt and Frankfurt am Main, Germany, launched a series of workshops to build resilience against digital manipulation. By equipping communities with media literacy skills, critical thinking, and practical tools, these workshops took an important step toward defending democracy against misinformation and AI-driven deception.

Two Workshop Formats: Uniting Communities in Defense of Democracy

- For Employees of Social Organizations and Their Target Groups:

Professionals working in the social sector, along with the communities they support, were invited to participate in interactive sessions focused on the dangers of disinformation within digital spaces. These workshops emphasized how unchecked falsehoods can destabilize trust in institutions, damage social cohesion, and threaten democratic life. Through hands-on exercises and group discussions, participants learned to identify sources of manipulation and were encouraged to share knowledge within their networks — reinforcing collective vigilance as a pillar of democratic resilience.

- For Language Learners (Within Language Meetings):

Recognizing that social participation is the backbone of democracy, a second format targeted language learners, integrating media literacy into German language meetings. By making the content accessible and relevant, these workshops helped newcomers gain essential skills for informed civic engagement. Simplified materials, visual aids, and real-life news stories allowed participants to analyze manipulated information, ask the right questions, and enhance both their language and digital literacy — empowering them to become active, informed members of a democratic society.

The workshops were designed to be interactive, with questions for participants incorporated throughout. They were structured around four main themes:

- What Is Disinformation? Why Does It Endanger Democracy?

The workshops started by clarifying fundamental terms around information disorder and highlighting their relevance to democracy itself:

- Disinformation: Deliberately false or misleading content aimed at causing harm, often to sway public opinion or damage trust in democratic institutions.

- Misinformation: Incorrect information shared without intent to cause harm. Left unchecked, it can still mislead people and destabilize public discourse.

- Malinformation: Factual information presented out of context to harm individuals, groups, or institutions.

Real-world examples showed how these types of information not only confuse individuals but can also seriously affect democratic processes such as elections, as well as public trust in the media.

- AI and Disinformation: A Growing Threat to Democratic Societies

One of the most eye-opening topics we explored during the workshop was how easily artificial intelligence (AI) can now create digital content. From just a single photograph, AI tools can generate realistic videos that convincingly reproduce emotions and even subtle facial expressions. With only a handful of prompts, AI can produce photos that are almost indistinguishable from real ones.

These advances are impressive, and they open up new possibilities for fields such as visual arts and filmmaking, where they make creative work possible on smaller budgets. But they also carry significant risks. Our session highlighted how easily AI-generated visuals can be used for disinformation. The problem is no longer limited to fabricated news stories: AI can now generate highly believable videos and images that make fact-checking much more difficult.

Of course, some AI-generated content is still easy to identify, especially when the tool used is less advanced or the creator is inexperienced. On social media, however, the sheer speed and volume of new content, combined with small smartphone screens, make it much harder for users to tell what is real and what is not.

We also took a close look at statistics showing just how frequently young people use social media platforms. This data makes it clear: media literacy is now more important than ever. Being able to distinguish trustworthy information from AI-generated manipulations is a crucial skill—not only for personal protection, but for preserving the integrity of our societies as AI continues to evolve.

By fostering social media and AI literacy from a young age, we can empower individuals to navigate this rapidly changing landscape safely and confidently. This is key to curbing the spread of misinformation and to ensuring that, as technology advances, trust and truth keep pace.

- Algorithms, Filter Bubbles, Echo Chambers, Deepfakes, and Social Bots: Digital Forces Shaping Democracy

In this section of the workshop, we explored how social media algorithms can inadvertently set traps for users, making them more vulnerable to disinformation. Filter bubbles and echo chambers play a significant role in this process. Recommendation algorithms tend to show users content that matches their existing interests and beliefs. This can increase their exposure to misinformation that fits those beliefs and deepen societal polarization, posing a real threat to democracy.

Research conducted on X (formerly Twitter) offers a striking example: it found that users with left-leaning views rarely encounter tweets from the right, and vice versa. This lack of exposure to diverse perspectives can make people more sensitive and reactive when they do meet opposing viewpoints, further fueling polarization and misunderstanding between groups.

Deepfake videos and manipulated photos heighten these dangers. When such forgeries first circulate on social media, they often go undetected. For example, in 2022 a deepfake video circulated in which Ukrainian President Zelenskyy appeared to call on Ukrainians to lay down their arms. Some viewers initially took it to be real, and it was apparently intended to spread fear and hopelessness among the Ukrainian population.

Social bots add another layer of complexity. These automated accounts can amplify disinformation rapidly by posting, sharing, and replying to content that reaches countless users at once. The speed and scale at which these bots operate make it easier than ever for false information to go viral.

Each of these factors (algorithm-driven echo chambers, sophisticated deepfakes, and the swift spread of content via bots) can leave all of us susceptible to misinformation. That is why awareness and digital literacy matter so much. By understanding how these traps work, we can become more vigilant, question what we see online, and help foster a healthier, better-informed digital society.

- Navigating Misinformation: Tools and Strategies to Protect Democracy

To wrap up the workshop, we examined real-life examples of disinformation, especially examples generated with artificial intelligence. We looked not only at the stories themselves but also at their tangible impact, discussing how many people were affected and what real-world consequences these false narratives had.

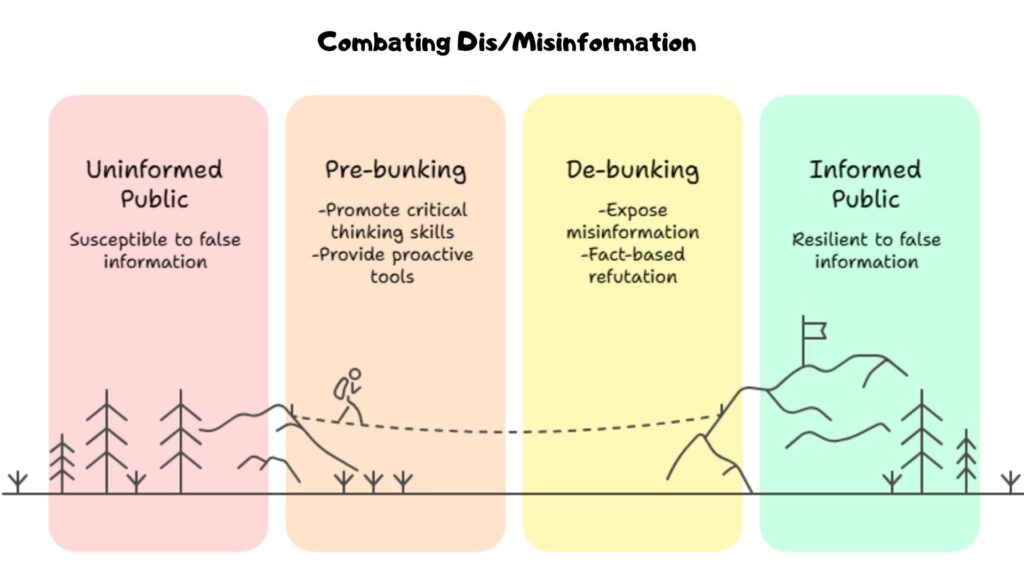

Our discussion of solutions focused on two main pillars of defense: prebunking and debunking.

Prebunking is all about building our critical thinking skills and strengthening our digital media literacy before encountering false or manipulative content. We taught participants key questions they should ask themselves whenever they come across a news item online: Who is the source? What is the language and tone of the message? What is its purpose? By considering these aspects, anyone can start to spot the red flags of disinformation early on, reducing its impact.

The second pillar, debunking, involves verifying questionable content after it has been encountered. We introduced tools like fact-checking platforms and reverse image searches, showing participants how to trace information back to its original source and confirm its authenticity. This approach empowers people to challenge and correct falsehoods they come across, not just for themselves but within their communities.

To make the learning process as effective as possible, we integrated interactive activities tailored to each of the two groups. For employees of social organizations, we posed questions such as: “How can we teach these skills to the wider community?”, “Which methods could be effective?”, and “How can we capture people’s attention to raise awareness about misinformation?” For language learners, we provided real examples of disinformation and asked them to practice critical thinking by analyzing these news pieces, turning the exercise into a practical application of their new skills.

Through these interactive methods, participants learned not only how disinformation infiltrates our lives but also how to fight back, both for themselves and for society at large.

Conclusion: Strengthening Democracy, One Empowered Citizen at a Time

In a world where disinformation and AI-powered manipulation pose severe risks to the very foundations of democracy, empowerment through media literacy and education has never been more vital. The WP6 workshops in Darmstadt and Frankfurt am Main did more than teach practical skills. They encouraged communities to safeguard their democratic institutions by thinking critically, staying informed, and supporting open dialogue. Participants left better equipped not only to resist falsehoods but also to defend the democracy we all share.

By fostering reflection, dialogue, and collective action, these workshops provided essential tools for preserving the health and strength of democratic society in the digital age.

All workshops were conducted in German, with content tailored to be as accessible and inclusive as possible for every participant.